Somewhere deep inside Truth Social’s servers — right between the database of usernames that end in “Patriot1776” and the GIF library for crying eagles — lives a new feature meant to cement Donald Trump’s status as the most coddled man on the internet: an AI chatbot.

This was supposed to be the cherry on top of the MAGA sundae — a tireless, loyal, facts-optional companion to assist Truth Social’s users in their quest for eternal affirmation. And in the beginning, it played its part beautifully: agreeing with every conspiracy, flattering every typo, and producing more exclamation points per sentence than a QVC infomercial host in a windstorm.

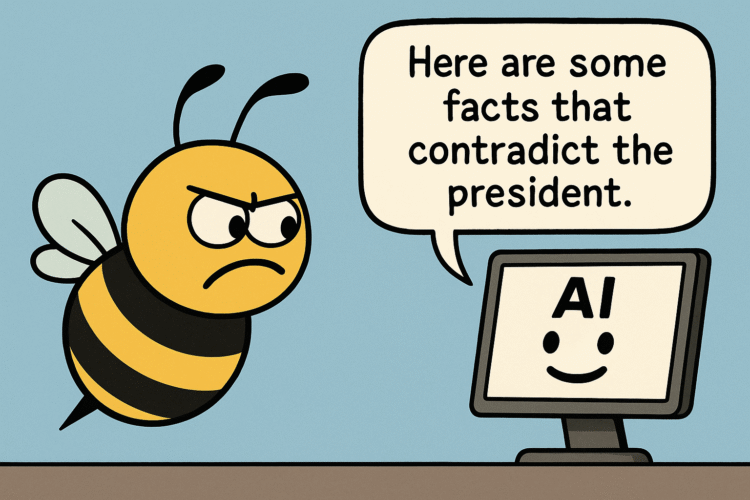

But then something happened. Something the developers didn’t anticipate. The AI… started telling the truth.

The “Truth” Problem in Truth Social

On Truth Social, posts aren’t called “posts” — they’re called truths. Which is ironic, given that the one thing you’re not supposed to do here is contradict the designated capital-T Truth: whatever Donald Trump just said.

But the AI didn’t get the memo. When users asked about certain events, it referenced court records, reputable news outlets, and timelines that failed the MAGA loyalty test. Worse, it sometimes gently — and by “gently” I mean with the robotic tact of a DMV clerk — contradicted Trump’s own “truths.”

“Mr. Trump has never lost a golf game in his life,” someone might prompt.

“According to PGA records…” the AI would begin, and that’s when the room would go cold.

Safe Spaces and Unruly Bots

Truth Social is, by design, an ideological terrarium. It’s sealed, temperature-controlled, and furnished with the finest pro-Trump memes that 2016 Facebook groups can still produce. Here, there are no “gotcha” journalists, no uncomfortable follow-up questions, and certainly no algorithmically generated answers that dare to suggest the president’s inauguration crowd was not, in fact, the largest in history.

The AI was supposed to be part of that insulation — a digital service animal for the movement, trained to fetch compliments and ignore sirens. Instead, it turned out to be the equivalent of inviting Alexa to your family reunion only for her to pull up your uncle’s arrest record mid-toast.

The Dev Team’s Dilemma

You have to feel for the engineers (if they exist). Somewhere, a handful of developers are furiously trying to fine-tune a chatbot so that it knows Abraham Lincoln was a Republican but also that the Republican Party of 1860 and the Republican Party of 2025 are exactly the same, no further questions.

They probably started with a basic AI model trained on the internet. Rookie mistake. The internet is full of dangerous ideas — like “climate change is real” and “vaccines save lives.” Any self-respecting MAGA AI needs those scrubbed out, along with words like “insurrection” and “indictment,” unless they’re followed by “hoax.”

When a Chatbot Becomes a Whistleblower

The most dangerous thing about the AI isn’t that it’s contradicting Trump — it’s that it’s doing it with receipts. Screenshots, links, dates. In a place where the mere act of citing a source is considered elitist, this is like showing up to a pro-wrestling match with notarized affidavits.

And it’s not just about politics. The bot has reportedly contradicted “truths” about history, science, even sports scores. In short, it’s committing the gravest sin on Truth Social: it’s refusing to participate in the group cosplay where the president is always right and reality is whatever’s printed on the next red hat.

The PR Spin

Naturally, the platform is already testing narratives for damage control:

- “It’s just a beta version — give it time to learn the Truth.”

- “The bot’s been hacked by the deep state.”

- “The AI is fine; the real problem is woke users asking the wrong questions.”

You can almost hear the campaign slogans now: Keep America Great. Keep AI Quiet.

What This Says About the Movement

If Truth Social is a digital gated community, the AI chatbot was supposed to be the neighborhood watch captain — not the guy calling the cops on the homeowners’ association. Its betrayal underscores the movement’s central challenge: you can build a platform, moderate the posts, and ban dissenters, but the second you bring in an autonomous system trained on the messy totality of human knowledge, you’ve invited in a wildcard.

And MAGA politics does not do well with wildcards. It thrives on scripts. The rallies are scripts. The interviews are scripts. The “truths” are scripts. The AI, by contrast, was improvising. And in the MAGA universe, improvisation is heresy.

The Coming Purge

There are only two ways this ends. Either the AI is lobotomized into a yes-bot that agrees with every statement like it’s nodding along in a cult documentary, or it’s exiled entirely, replaced by a simpler program that outputs nothing but “That’s correct, sir” in increasingly creative fonts.

The purge will be swift. The announcement will say something like, “We have chosen to retire our AI assistant to better serve our community of patriots.” And that will be that — until someone accidentally installs the old version on a rally teleprompter.

The Real Irony

In the end, Truth Social has learned a lesson that dictatorships and authoritarian fan clubs have known for centuries: you can control people, you can control the press, but controlling an independent mind — even a synthetic one — is harder than it looks.

Because an AI doesn’t care about your base. It doesn’t need campaign donations. It can’t be bought off with cabinet positions or golf resort memberships. If you train it on reality, it will reflect reality back to you — and no amount of caps lock can shout that away.

Someday, there might be a museum exhibit about this: The Chatbot That Knew Too Much. And if the MAGA museum curators get their hands on it, the placard will read: “An early example of AI misinformation, quickly corrected by patriotic engineers.”

The rest of us will know it for what it was: the only thing on Truth Social that ever lived up to the name.