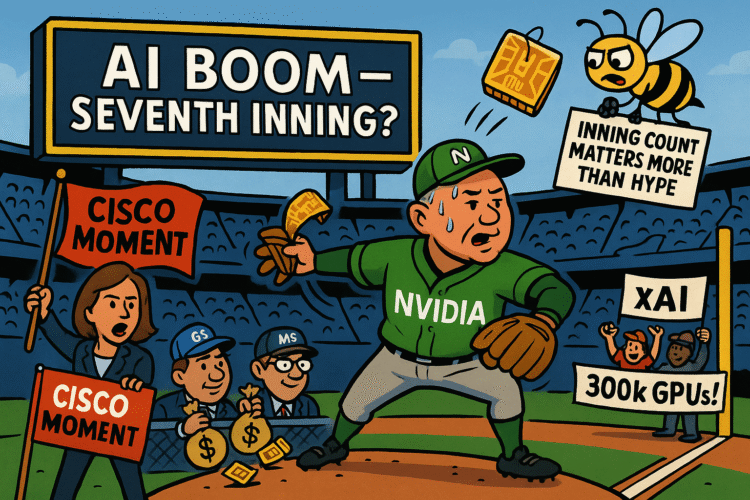

If the stock market were a baseball game, investors in Nvidia and the broader AI trade would be screaming from the bleachers, “Relax, we’re still warming up!” But Lisa Shalett of Morgan Stanley Wealth Management begs to differ. In an October 7 Fortune interview, she invoked a metaphor that landed like a bucket of ice water: we are not in the first inning, she warned, but “closer to the seventh.” Translation—this game is more than half over, the bullpen is stirring, and the rally you think just started might actually be cresting.

That’s not just a bad mood talking. It’s a red-flag inventory that reads like Cisco circa 1999.

The Anatomy of a Memo: Circular Money in Motion

The specific red light flashing over Wall Street right now isn’t simply valuation froth. It’s something subtler, uglier, and deeply familiar to veterans of the dot-com crash: vendor financing. Back then, Cisco juiced sales by lending money to its customers, who then used the money to buy Cisco gear, inflating revenues until reality snapped the loop.

Today, Nvidia isn’t cutting loans, but the rhyme is close enough to make you sweat. Reports show Nvidia has pledged as much as $100 billion to OpenAI in staged equity commitments—$10 billion chunks that value OpenAI at half a trillion dollars. Add another $5 billion check to Intel, and suddenly the world-beater is a world-bankroller.

Goldman Sachs analyst James Schneider put it bluntly: are we seeing “circular revenue”? Nvidia seeds its own customers with equity financing, and (surprise!) those customers turn around and buy Nvidia GPUs in volume. It looks like growth, smells like growth, but may just be a recycling scheme with a tech gloss.

The Market Scoreboard

The numbers as of October 7 are impressive, if not intimidating. Nvidia shares are up 38% year-to-date, outpacing the S&P 500’s +15%. Bulls insist the rally is justified:

- A thaw with China could restore as much as $15 billion in Blackwell chip sales.

- Elon Musk’s xAI is building a Memphis supercomputer with ~300,000 Nvidia GPUs, worth around $18 billion.

- Hyperscalers are dumping cash into AI data centers at a speed that makes “capex discipline” sound like a dead language.

But beneath those headline catalysts, breadth is narrowing. Multiple expansion leans heavily on a handful of mega-customers. And those mega-customers? Their own downstream AI offerings are still largely “pre-revenue,” a euphemism for “we’re demoing cool stuff; trust us, the money will follow.”

Shalett’s Late-Cycle Checklist

Lisa Shalett’s Cisco comparison isn’t casual. She ticked through the same late-cycle tells that defined 1999:

- Buyer concentration risk: A handful of customers drive both receivables and future sales visibility. If even one sneezes, Nvidia catches pneumonia.

- Balance sheet engineering: Equity deals and mega-checks look a lot like subsidizing demand today at the expense of organic growth tomorrow.

- Hype-to-utility gap: Consumer and enterprise users aren’t yet paying for AI at scale, even as infrastructure spending explodes.

Her baseball metaphor stings because it’s visual. Everyone thinks we’re stretching, maybe bunting in the first inning. She’s saying the nacho trays are being cleaned out, the fans are restless, and the scoreboard is heading for extra innings no one budgeted for.

The Ghost of Cisco

John Chambers, Cisco’s ex-CEO, is already out there drawing lines between then and now. In 1999, Cisco looked untouchable. Its market cap ballooned, its routers defined the internet, and analysts called it the “backbone of the new economy.” Sound familiar?

Then vendor financing collapsed, buyer concentration turned into cancellations, and Cisco lost nearly 90% of its market value in the crash. Its cautionary tale isn’t just about excess—it’s about what happens when you confuse infrastructure demand with real end-user monetization.

The Counterarguments

To be fair, not everyone thinks the bubble’s about to pop. San Francisco Fed President Mary Daly says a pullback in AI stocks wouldn’t threaten financial stability. Some asset managers argue Nvidia’s customer concentration is manageable, pointing out that hyperscalers are not dot-com startups with no revenue but trillion-dollar giants with diversified businesses.

And then there’s the “AI super-cycle” camp: believers who insist we’re still in the early innings of a productivity revolution, and today’s spending is just the scaffolding for decades of growth. According to them, comparing Nvidia to Cisco is like comparing a space shuttle to a roller skate.

The Moving Parts Investors Can’t Ignore

Still, the similarities gnaw because they’re structural. Fortune’s piece laid out four specific risks that, if ignored, make “Cisco moment” more than just a metaphor:

- Circular revenue risk: If Nvidia’s equity-financed customers are booking GPU orders, then revenue is partly self-funded. The minute that loop ends, the air comes out.

- Policy and trade volatility: Tariff changes or export-control carve-outs can swing tens of billions in addressable sales. China is both lifeline and landmine.

- Supply-chain fragility: High-bandwidth memory (HBM) and substrate bottlenecks are today’s “scarcity premium,” but if orders slow, they flip to overhangs and glut.

- Historic rhyme: Vendor financing, channel stuffing, concentrated buyers—dot-com déjà vu with faster chips and flashier slides.
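To make the circular revenue risk concrete, here is a minimal back-of-the-envelope sketch. Only the $10 billion tranche size comes from the reporting above. The `recycle_rate` (the share of invested cash that comes back as GPU orders) and the annual revenue figure are hypothetical placeholders, not reported numbers, and `self_funded_share` is an illustrative helper, not anyone's official methodology.

```python
def self_funded_share(vendor_investment, recycle_rate, vendor_revenue):
    """Estimate the fraction of a vendor's revenue that traces back to
    cash the vendor itself invested in its customers.

    vendor_investment: cash the vendor put into customers (dollars)
    recycle_rate: assumed fraction of that cash spent on the vendor's products
    vendor_revenue: vendor's total revenue over the same period (dollars)
    """
    if vendor_revenue <= 0:
        raise ValueError("vendor_revenue must be positive")
    if not 0.0 <= recycle_rate <= 1.0:
        raise ValueError("recycle_rate must be between 0 and 1")
    recycled = vendor_investment * recycle_rate
    # The share cannot exceed 100% of revenue.
    return min(recycled / vendor_revenue, 1.0)


# Hypothetical inputs: one $10B tranche (from the article), an ASSUMED 70%
# recycle rate, and an ASSUMED $150B of annual revenue.
tranche = 10e9
assumed_recycle_rate = 0.7
assumed_revenue = 150e9

share = self_funded_share(tranche, assumed_recycle_rate, assumed_revenue)
organic_revenue = assumed_revenue * (1 - share)

print(f"Self-funded share of revenue: {share:.1%}")
print(f"Revenue excluding the loop:   ${organic_revenue / 1e9:.0f}B")
```

The point of the exercise is sensitivity, not precision: rerun it with a higher recycle rate or several tranches in the same year and see how quickly the "organic" number shrinks.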

October’s Timeline in Miniature

- Late September–early October: Reports detail Nvidia’s $100 billion OpenAI commitment in $10 billion tranches, targeting capacity by the second half of 2026. Nvidia’s $5 billion investment in Intel is confirmed.

- October 7: Fortune publishes Shalett’s interview, where she says she’s “very concerned,” invoking the seventh-inning analogy.

- Same day: Syndications amplify the Cisco warning across financial media.

- October 7: Market closes with Nvidia still +38% year-to-date, S&P +15%. Bulls and bears sharpen knives.

The Investor Watchlist

If you want to separate durable growth from bubble air, you need receipts. Literally. Watch for:

- Disclosures on equity-linked customer deals: Who is getting Nvidia money to buy Nvidia chips?

- Receivables concentration and Days Sales Outstanding (DSO): Are cash flows getting stretched by mega-customers?

- Hyperscaler capex guidance vs. actual monetization: Are the billions spent on GPUs translating into billions in revenue, and into meaningful revenue per user?

- China licensing outcomes: Does Blackwell’s potential $15 billion in sales survive trade restrictions?

- xAI purchase orders: Does Musk’s 300,000 GPU order land on time or slip into the vaporware file?

- Downstream revenue: Are AI workloads finally producing paid, margin-accretive products, or are we still in perpetual demo land?
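Two of the watchlist items, DSO and receivables concentration, are simple enough to track yourself from quarterly filings. The sketch below uses the standard definition of DSO (receivables divided by revenue, times the days in the period). Every figure and customer label in it is a made-up placeholder for illustration, not Nvidia data, and the helper names are my own.

```python
def days_sales_outstanding(receivables, revenue, period_days=90):
    """DSO = (accounts receivable / revenue for the period) * days in period."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return receivables / revenue * period_days


def top_customer_share(receivables_by_customer, top_n=2):
    """Fraction of total receivables owed by the top_n largest customers."""
    amounts = sorted(receivables_by_customer.values(), reverse=True)
    total = sum(amounts)
    if total <= 0:
        raise ValueError("total receivables must be positive")
    return sum(amounts[:top_n]) / total


# HYPOTHETICAL quarterly figures in $ billions: (receivables, revenue).
quarters = {
    "Q1": (20.0, 40.0),
    "Q2": (30.0, 45.0),
}
for name, (receivables, revenue) in quarters.items():
    dso = days_sales_outstanding(receivables, revenue)
    print(f"{name}: DSO = {dso:.0f} days")

# HYPOTHETICAL receivables by customer, in $ billions.
by_customer = {"Customer A": 12.0, "Customer B": 9.0, "Customer C": 5.0, "Customer D": 4.0}
print(f"Top-2 share of receivables: {top_customer_share(by_customer):.0%}")
```

A DSO that climbs quarter after quarter while revenue grows suggests customers are taking longer to pay; a rising top-customer share suggests the collection risk is pooling in fewer hands. Neither proves trouble on its own, but both are the kind of receipts the watchlist is asking for.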

The Fortune thesis, after all, is not “AI is fake.” The thesis is that the AI boom may already be in its late innings. And when the star pitcher starts financing his own ticket sales, you should probably check the scoreboard.

Closing: When the Inning Count Matters More than the Highlight Reel

AI is not Pets.com. Nvidia is not Enron. But enthusiasm cycles follow patterns, and the scariest thing about Shalett’s Cisco analogy is how specific the rhymes are. Late-cycle behavior, concentrated buyers, balance-sheet engineering, circular revenue, hype still waiting for monetization—that’s a bingo card you don’t want to fill.

The difference between a bubble and a revolution isn’t whether the technology is real—it’s whether the money sustains until the utility catches up. If the game really is in the seventh inning, then the market has a lot less time to figure that out than the hype reels suggest.

Investors may still be doing the wave in the stands, but the veterans are counting outs. And if the lesson of Cisco means anything, it’s that knowing the inning matters more than cheering the highlight.