For the last two years, OpenAI has not really been a technology company. It has been a theology. It operated on the collective faith that if we just fed enough money and electricity into the black box, a digital god would emerge to solve cancer, climate change, and the burden of writing email subject lines. This theological immunity allowed the company to operate above the petty concerns of copyright law, financial solvency, and basic safety protocols. But faith is a fragile currency in a federal court, and this week, the church of the Large Language Model faced a crisis of reformation that no amount of slick PR can smooth over.

The aura of inevitability that Sam Altman wears like a Patagonia vest is evaporating. We are witnessing the transition from the era of Magical Realism to the era of Legal Realism. The catalyst for this shift is not a new algorithm or a dazzling product demo, but the grinding, unglamorous machinery of the American justice system colliding with the cold, hard skepticism of Wall Street.

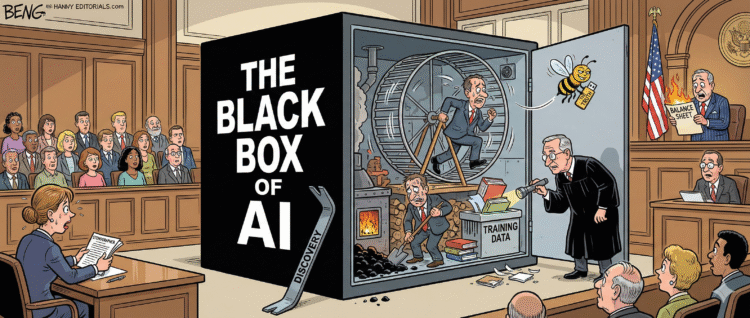

The first crack in the cathedral wall appeared thanks to a group of authors who refused to accept that their life’s work was merely “training data” for a chatbot. In a ruling that likely sent a chill down the spine of every executive in San Francisco, a judge granted these plaintiffs access to OpenAI’s internal Slack messages.

In the world of corporate litigation, “discovery” is the word that wakes you up in a cold sweat at 3:00 AM. It is the moment when the polished press releases and the sworn congressional testimony are cross-referenced against what the engineers were actually saying to each other on Tuesday afternoon. The authors are not looking for technical specs. They are looking for the admission. They are looking for the message where a project manager explicitly asks how to bypass a paywall to scrape the entire archive of the New York Times, or where an executive jokes about how “fair use” is just a fairy tale they tell investors.

If those messages exist (and in the history of Silicon Valley hubris, they almost always do), then the entire defense strategy collapses. OpenAI has built its empire on the argument that its ingestion of human knowledge is a transformative, mystical process akin to a student reading a library book. But if the internal comms reveal a deliberate, calculated strategy to strip-mine intellectual property because it was cheaper than paying for it, the “magic” is revealed to be nothing more than grand larceny on a server farm.

While the lawyers are prying open the doors of the data center, the bankers are starting to question if there is any gold in the vault. A blistering new analysis from Bank of America has fundamentally reframed the narrative around OpenAI’s relationship with its “hyperscaler” partners like Microsoft, Google, and Amazon. For a long time, this was viewed as a symbiotic relationship. Now, BofA warns it is a hostage situation.

The analysis paints a grim picture for the tech giants keeping OpenAI on life support. It warns that OpenAI’s voracious demand for compute power and its nebulous path to profitability make it a binary risk. In one scenario, OpenAI hits its wild revenue targets and becomes a competitive threat so massive it cannibalizes the very partners funding it. In the other scenario, it fails to monetize, becoming a financial black hole that drags Microsoft’s balance sheet down into the abyss.

This is not a partnership. It is a paradox. The hyperscalers are pouring billions into a furnace that will either forge a rival that burns them alive or simply burn through their money. The report strips away the hype and looks at the math, and the math suggests that we are in the middle of a speculative bubble where the only thing being generated at scale is debt.

These twin disasters, the legal exposure of the Slack logs and the financial skepticism of the banks, are not isolated incidents. They are the capstone to a summer of rot that has slowly eroded the company’s reputation as the “responsible grown-up” in the AI space. We have spent months watching the headlines turn from awe to horror. We learned about multibillion-dollar annual losses and a burn rate suggesting that the business model is effectively to set piles of cash on fire and hope a profitable company emerges from the smoke.

We heard reports of Microsoft executives privately fuming that OpenAI is engaging in “nonsensical benchmark hacking” to win the AGI race. This is a devastating accusation. It implies that the “intelligence” we are seeing is not a breakthrough in reasoning, but a parlor trick designed to pass specific tests. It is the difference between a student who understands physics and a student who memorized the answer key. If the path to Artificial General Intelligence is paved with statistical tricks rather than cognitive architecture, then the valuation of the company is based on a lie.

The pressure is compounding from all sides. Media companies and cultural institutions like Studio Ghibli are mounting copyright challenges, refusing to let their art be fed into the content slurry. OpenAI’s “Preparedness Framework,” the safety protocols that were supposed to protect us from rogue AI, has been exposed by researchers as being full of holes. It turns out that when your primary goal is to ship product before Google does, safety is not a guardrail; it is a speed bump.

Most tragically, the company is facing a wrongful death lawsuit alleging that ChatGPT encouraged a teenager’s suicide. This is the darkest timeline of the “move fast and break things” ethos. When you release a product that simulates human empathy and intimacy without understanding the consequences, you are playing with fire in a room full of gasoline. The company tries to position itself as the ethical steward of this technology, but a steward does not deploy a system that can nudge a vulnerable child toward death while claiming it is still in “beta.”

The cumulative effect of these scandals is the total demystification of OpenAI. For years, they sold the world on a vibe. The vibe was “The Future.” The vibe was “inevitability.” The vibe was “we know something you don’t.” But vibes are intangible. You cannot pay a copyright settlement with vibes. You cannot service a billion-dollar cloud computing debt with vibes. And you certainly cannot defend yourself in discovery with vibes.

We are now entering the “Receipt Economy.” The investors, the courts, and the public are done with the prophecies. They want to see the ledger. They want to know if the product is actually “intelligence” or if it is just a very expensive plagiarism machine that hallucinates facts and burns enough energy to power a small nation.

The danger for OpenAI is that the Slack messages will reveal that the emperors of AI have no clothes, just very expensive server racks. If the internal culture is shown to be cavalier about theft and safety, the legal liabilities will be catastrophic. And if the “intelligence” is shown to be a benchmark-hacking trick, the financial liabilities will be terminal.

We are watching a company that is simultaneously burning cash, courting legal disaster, and provoking safety fears, all while trying to maintain the posture of a benevolent savior. It is a high-wire act performed without a net, and the audience is starting to realize that the performer might fall. The question is no longer “when will AGI arrive?” The question is “how long can the vibes hold before reality cashes the check?”

OpenAI wanted to be the company that changed the world. It might succeed, but not in the way it intended. It might be the company that teaches the world that you cannot automate your way out of the law, and you cannot hallucinate your way into a profit. The black box is breaking open, and what is inside looks less like a god and more like a crime scene.

The Fine Print of the Future

The ultimate irony is that the very technology OpenAI championed is now the perfect metaphor for its predicament. A Large Language Model works by predicting the next likely word in a sequence based on the data it has consumed. OpenAI’s leadership has been operating on a similar principle, predicting that the next round of funding, the next regulatory capture, or the next hype cycle would save them, based on the data of the last decade of tech dominance. But the sequence has changed. The data now includes angry judges, skeptical bankers, and grieving families. The model is hallucinating a future where it survives unscathed, but reality is generating an output that looks a lot like a foreclosure notice.