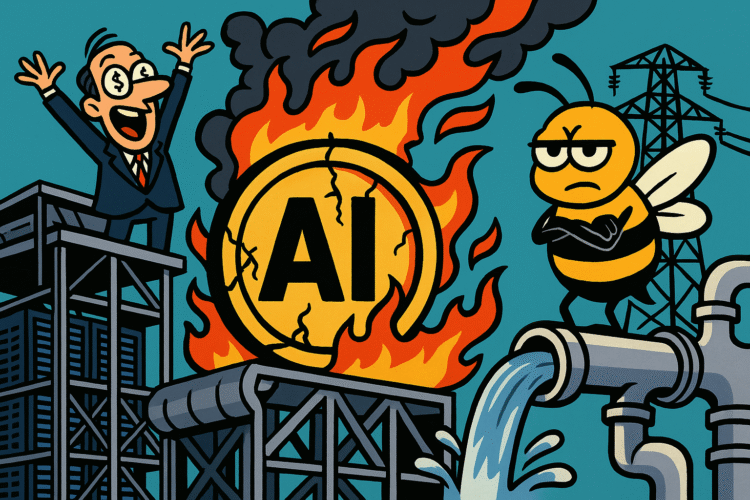

There is something unsettling about watching the world’s most hyped technology industry behave exactly like every other bubble in history. You know the pattern: vast promises, endless capital, faith in a new magical substance, then neglect of the plumbing, then collective shock when the bills arrive. With AI, we are already deep into the middle of that tale. We have trillion-dollar market caps concentrated in a handful of firms, hundred-billion-dollar capex sprints for data centers in the desert heat, power-purchase agreements that lay claim to future grid capacity, and chip oligopolies funneling the spoils to a few vendors while hundreds of “me-too” startups torch runway chasing the same foundation-model dream. The hype is loud. The math is quiet. And the plumbing is groaning.

Let’s get to work with the hard hat on and the ledger open. First, the market caps: firms built on AI moats now trade at multiples that assume near-infinite growth. Investors believe that every consumer app and every industrial process will pass through a neural network. The chip industry is treated as the new oil. Data centers are treated as national infrastructure, almost on par with highways. But how does this translate into actual economics? This is where the hazards appear. The upstream supply chain is concentrated: a few chip vendors, a few foundries, a few power-grid integrators. Meanwhile, the real measure of success is not how many tokens pass through models but revenue per token versus the cost per kilowatt-hour and per chip wafer. Every inference on a foundation model with billions of parameters consumes compute time, electricity, cooling, network capacity, and hardware depreciation. When you burn kilowatt-hours for the sake of “innovation,” at some point marginal cost outruns marginal revenue. That is what pops bubbles.
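The ledger argument reduces to simple arithmetic: revenue per token minus the energy and hardware cost of producing it. Here is a minimal sketch in Python; the function names, and every input number, are hypothetical placeholders chosen for illustration, not figures from any real model, chip vendor, or utility.

```python
# Sketch of per-token unit economics. All inputs are hypothetical placeholders,
# not figures from any real model, chip, or utility.


def gross_margin_per_million_tokens(
    price_per_million_tokens: float,          # revenue per 1M tokens served (USD)
    kwh_per_million_tokens: float,            # energy per 1M tokens, cooling included (kWh)
    price_per_kwh: float,                     # electricity price (USD per kWh)
    hardware_cost_per_million_tokens: float,  # amortized chip depreciation per 1M tokens (USD)
) -> float:
    """Revenue minus marginal cost (energy + hardware) for one million tokens."""
    energy_cost = kwh_per_million_tokens * price_per_kwh
    return price_per_million_tokens - (energy_cost + hardware_cost_per_million_tokens)


def break_even_price_per_kwh(
    price_per_million_tokens: float,
    kwh_per_million_tokens: float,
    hardware_cost_per_million_tokens: float,
) -> float:
    """Electricity price at which the margin above falls to zero."""
    return (price_per_million_tokens - hardware_cost_per_million_tokens) / kwh_per_million_tokens


if __name__ == "__main__":
    # Illustrative inputs only: $2.00 per 1M tokens, 4 kWh and $1.20 of hardware per 1M tokens.
    for kwh_price in (0.10, 0.20, 0.25):
        margin = gross_margin_per_million_tokens(2.00, 4.0, kwh_price, 1.20)
        print(f"${kwh_price:.2f}/kWh -> margin ${margin:+.2f} per 1M tokens")
    print(f"break-even: ${break_even_price_per_kwh(2.00, 4.0, 1.20):.2f}/kWh")
```

With these made-up inputs, the margin is positive at $0.10/kWh, roughly zero at the $0.20/kWh break-even price, and negative at $0.25/kWh: the same model serving the same tokens goes from profitable to loss-making purely because the power bill moved. The sketch treats hardware cost per token as fixed; in practice it also depends on how fully the chips are utilized.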

Right now we see enterprise pilots stalling at compliance reviews, ROI metrics going unread, legal teams studying model liability, and data owners suing for royalties. As data-center ROI slides, internal credit markets stop subsidizing negative gross margins. The bubble shows the same signs as the dot-com bubble of 2000 and the subprime bubble of 2007, except that the concentration of power is now even more extreme. The few firms that win will own the chips, cooling, power, cables, and inference rooms, while the public gets the bills, the brownouts, and the lag.

Take the plumbing: data centers are hungry. They need water for cooling. They need transmission lines. They need transformers. Engineers say the bottleneck is not software; it is semiconductors, electricity, latency, and cooling. These are physical limits: heat, distance, speed, cost. Press releases do not change that. A model with billions of parameters needs power, and that power comes from somewhere. When you pile AI farms into zones with weak grids, you get rate hikes, you get municipal bonds issued for substations instead of schools, you get cheap farmland turned into server barns. The risk is not simply idle chips. It is energy monopolies, water stress, and zip codes turned into compute zones.

Now let’s stack up winners and losers. Winners: upstream suppliers and hyperscalers who amortize chips, power, rights, and risk. They collect a toll on every inference because they own the infrastructure. They buy chips in bulk. They sign long power-purchase deals. They get preferential rates. They rent out compute. They squeeze everyone else. Losers: late-stage startups that assumed a foundation model would be a door to scale; cloud-dependent midsize firms that build around rented intelligence only to see price hikes; creative workers and data owners whose labor is scraped and resold; communities that trade farmland and water for speculative server barns. And the American public: you get higher utility bills, you get rate-based subsidies for grid buildouts, you get a fragile economy tied to a single volatile input called compute.

Consider the household math. Your utility bill rises because data centers added new demand and the cost is socialized. Your retirement fund is overweight a dozen AI names because the index put all its chips there. Your job is replaced by prompt piece-work because the “productivity boost” means fewer employees doing more work at lower wages. The AI expansion promised more jobs. Instead we may get fewer jobs paying less, and more work renting our data to someone else’s AI. The retail investor owns the fear. The platform owns the scale.

Now consider what pops the bubble. First, the marginal cost of the electricity and hardware behind each token exceeds the revenue that token brings in. Second, credit markets realize that negative gross margins cannot last. Third, regulators impose rights, royalties, and safety overhead on models trained on the commons. Fourth, physical constraints bite: power supply, latency, cooling. Fifth, enterprise adoption stalls as pilots fail to convert. Sixth, geopolitical conflict spikes: if chip supply or power lines are disrupted, the machine halts. Seventh, public backlash builds over energy consumption, data privacy, and worker dislocation.

The internet boom built logistics and payments and still crashed. This one concentrates power, land, and electricity far more tightly. The risk is that when the music stops, a few firms will own the chips, cables, and customers while the public owns the bills and the brownouts, unless we demand a different path. That path includes ratepayer protections, open standards, shared compute, national public options for AI infrastructure, and enforceable transparency.

Now anchor this in law and regulation. Market caps rest on predictions, but the risk lies in model liability and royalties. If data owners sue and win royalties on scraped data, the cost of training explodes. If regulators force model audits or safety fees, the same capex buys less. If antitrust authorities decide that rented compute is effectively a monopoly-run utility, the growth assumption falters. If utilities shift costs onto the public, the burden on ratepayers rises. If capital markets price in the engineering limits, the valuation gap collapses.

What are the near-term checkpoints? First: cash flow versus capex at the giants. Are they still investing billions with no matching revenue yet? Second: signed megawatts versus announced ones. Are the power-purchase agreements delivering, or merely hyped? Third: chip-supply growth versus inference demand. Are companies buying chips they cannot use? Fourth: enterprise renewals that actually pay for themselves versus pilots that stagnate. Fifth: the model liability and royalty regime. Are lawsuits emerging? Sixth: antitrust and grid approval processes. Are regulators delaying the build? Seventh: cloud compute pricing. Are rents going up? Eighth: supply constraints. Are cost pressures from water, transformers, and labor hitting buildouts?

We must state plainly: this is not innovation alone. This is speculation on a promise of infinite compute, infinite growth, infinite productivity. But compute is finite. Power is finite. Attention is finite. Capital markets will sooner or later ask for proof. Until then we must ask who is absorbing risk, who wins, who loses.

Here is the brutal truth: if the AI boom is real, it should build accessible infrastructure, shared platforms, and democratized compute rather than centralized silos. If you are racing to build a monopoly on cognition, you are not innovating. You are extracting. You are building toll booths on intelligence. You are turning the promise of a universal productivity boost into rent paid to the few who own the chips. You are recreating the scarcity you claimed to eliminate.

Innovation means access. It means participation. It means the many, not the few. When AI remains the province of those who can afford a server farm, the rest of us become consumers of intelligence rather than citizens who share in it. The public’s stake becomes rent, not ownership. The economy becomes leased, not built. The future becomes something you buy, not something you shape.

Trace the bubble and you see patterns. In the dot-com boom of the 1990s, companies with no earnings were valued in the billions. In the 2007 subprime bubble, home values were divorced from incomes. Now you see AI companies with no profit but infinite promise. You see chip fabs raising billions on model hype alone. You see data centers built with ratepayers’ money. You see talent harvested from abroad, then compute rented back to them at a higher cost. The cycle: promise, capital, hype, backlash, a long tail of losses, consolidation, regret.

Yes, we need AI. But not this version of AI. Not the version where the infrastructure is a gated fortress and the public picks up the cost. Not the one where startups fail because pricing is rent. Not the one where workers are replaced by AI and a tiny few profit. Not the one where the grid is stressed, the water table drained, and the farmland turned into server barns. Beyond machines, we need policy. We need competition. We need guardrails. We need transparency about compute rents. We need open models. We need decentralization.

So here is what to watch. Are the giants turning a profit? Are their power agreements actually delivering substations? Are layoffs rising at startups that counted on cheap compute? Are the clouds raising rents? Are public utilities issuing bonds for AI buildouts? Are regulators asking hard questions about rights and reuse? Are data owners being paid? Are workers being replaced with prompt jobs? Are grid brownouts increasing? Are classrooms emptying while server farms expand? Are retirees exposed through indexes overweight in big AI names?

Because if we don’t check this, the next crash will be worse. The dot-com crash cost wealth. The subprime crash cost lives. The AI crash will cost smaller firms, innovative workers, small countries, public resources, and the future we hoped for. The few who own the chips and cables will be bailed out again. The many who rented them will be left with the bills.

This is our moment. We can treat AI as liberation, or we can accept toll extraction disguised as progress. If progress is only for the few, we will wake up ten years from now with fewer jobs, weaker public services, higher bills, fragile power grids, and a handful of companies sitting on the hardware and claiming they led the revolution. But the revolution was outsourced. And we missed it.

So yes, applaud innovation. Yes, support good AI. But also demand the logs. Demand transparency. Demand the cost accounting. Demand the public infrastructure. Demand the shared compute. Demand that the next wave of intelligence not be fenced behind a paywall. Because if you don’t, you will own none of it. The factories will be under new foreign roofs, the startups will be silent, the water rights sold, the power bills higher, and the public turned into the budget for someone else’s fortune.

When the music stops, remember this: we are not spectators. Our taxes, our utilities, our pensions, our towns, our communities, and our futures all matter. We will either share in the intelligence economy or pay for it. If we do not insist on sharing, we will inherit the bills and not the benefits.

Yes, the bubble is still inflating. But the better decision would be to stop treating compute like magic dust and start treating it like infrastructure. Because the next burst will not flash. It will linger. It will underperform. It will underdeliver. And when you wake up, you will realize you paid for the data center, the servers, and the cable lines, and you did not get the future you were promised.

We need something different. We need AI that builds access, not profit. We need a shared pipeline, not a monopolized toll road. We need this moment to be a public upgrade, not a private extraction. Because if we don’t choose that path, we will have built giant machines while losing the people. And that is the final irony: a revolution delivered through exclusion rather than inclusion.

You cannot scale intelligence forever, but you can scale fairness. And maybe that is the real infrastructure we should have built.